Pentagon grapples with growth of artificial intelligence. (Graphic by Breaking Defense, original brain graphic via Getty)

WASHINGTON — When pundits talk about military use of artificial intelligence, the phrase “human in the loop” is almost certain to come up. But a “human in the loop” isn’t defined in the official Pentagon policy, even after the policy was revised and expanded in January, nor in the months of internal refinement and international talks that followed.

Still, in 2023, the year ChatGPT rocketed AI into the international spotlight, the US government made a full-court push to define “responsible” military use of the technology on its own terms, and to sell that concept to both the American public and world opinion.

The Pentagon wants to keep its options open, in part, because many systems already in use have had a fully automated option, which takes the human out of the loop, for decades — like the Army’s Patriot, the Navy’s Aegis and similar missile defenses in service around the world. It may also be because the US military has no desire to unilaterally disarm itself if a highly automated system proves lethally superior, at least in some scenarios, to one slowed down by a human decision-maker — as years of simulations and experiments with AI-controlled fighter jets already suggest.

[This article is one of many in a series in which Breaking Defense reporters look back on the most significant (and entertaining) news stories of 2023 and look forward to what 2024 may hold.]

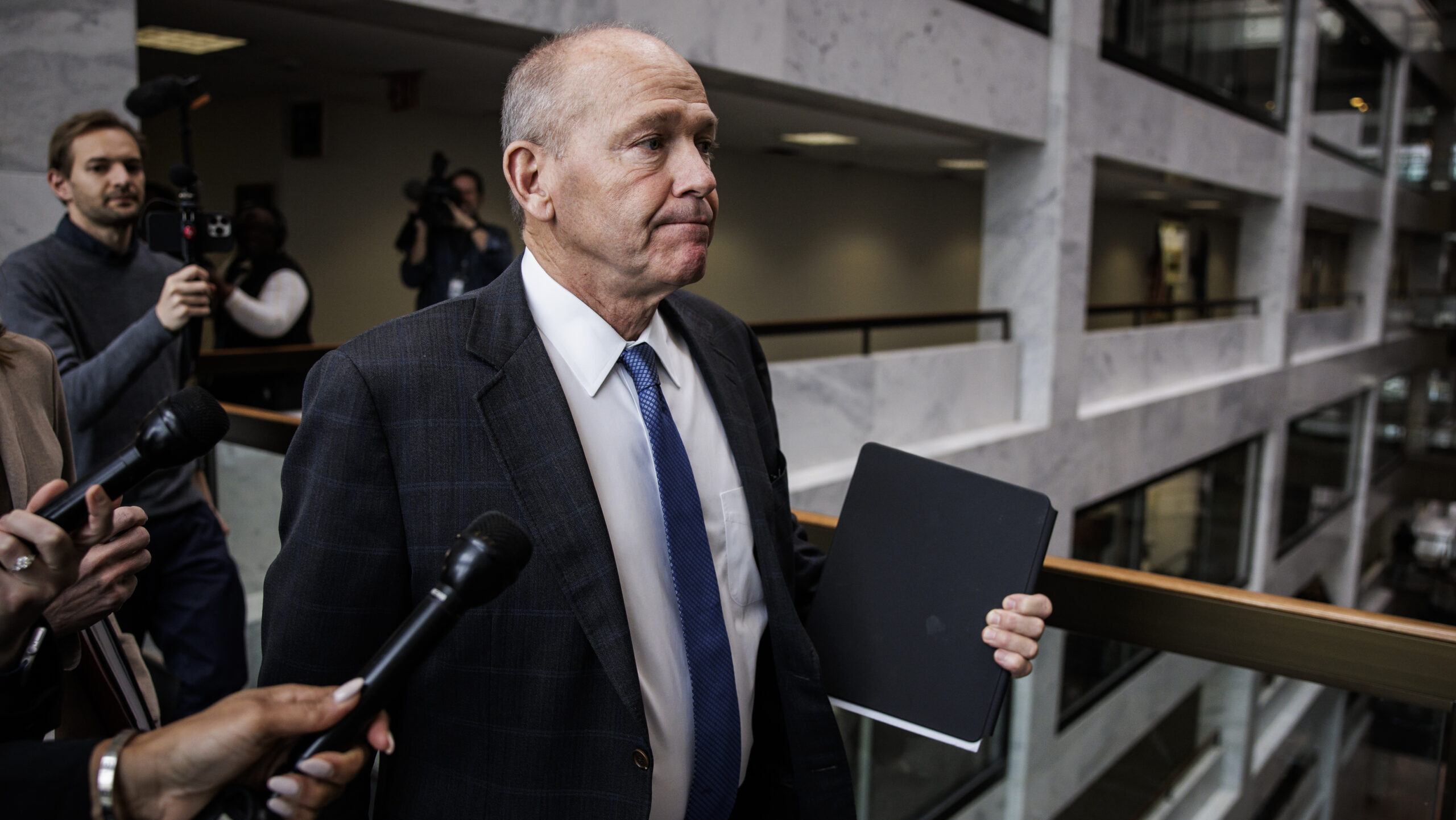

“I just got briefed by DARPA on some work that they’re doing on man versus unmanned combat [with] fighters,” Frank Kendall, the Secretary of the Air Force, said at the Reagan National Defense Forum on Dec. 2. “The AI wins routinely, [because] the best pilot you’re ever going to find is going to take a few tenths of a second to do something — the AI is gonna do it in a microsecond.”

“You can have human supervision,” he said. “You can watch over what the AI is doing. [But] if the human being is in the loop, you will lose.”

Yet Kendall isn’t some cackling Doctor Strangelove seeking to unleash the Terminator on the puny humans. He’s a human rights lawyer who’s served on the boards of Amnesty International and Human Rights First.

“I care a lot about civil society and the rule of law, including laws of armed conflict, and our policies are written around compliance with those laws,” he told the Reagan Forum. “[But] you don’t enforce laws against machines: You enforce them against people. And I think our challenge is not to somehow limit what we can do with AI, but it’s to find a way to hold people accountable for what the AI does.”

Kendall’s remarks aren’t just important because they show what the Secretary of the Air Force thinks. They also embody, with his distinctive bluntness, the fine line the entire US military is trying to walk between imposing ethical limits on the responsible use of AI and exploiting its war-winning potential to the fullest.

Walking A Fine Line in 2023

Kendall and his Pentagon colleagues were hardly unique in spending much of 2023 wrestling with the ethical and practical consequences of AI.

The European Union’s AI Act passed in early December, while President Joe Biden published an unprecedented executive order — the longest and most detailed EO in history — in October. But the Biden order focused on commercial and civil service AI, with relatively few provisions for the Pentagon and many provisions that actually applied defense innovations to other agencies, like having a designated chief AI officer and a formal AI strategy. That’s probably because the Defense Department was a leading agency on “responsible AI” and had worked hard on its own AI framework all year, with a significant assist from the State Department in signing up allied support.

The roots of this effort go back to the Trump administration. In 2019, the semi-independent Defense Innovation Advisory Board issued ten pages [PDF] of “AI Principles [for] the Ethical Use of Artificial Intelligence by the Department of Defense,” emphasizing that military AI must be “responsible,” “equitable,” “traceable,” “reliable” and “governable,” the last meaning both human oversight and AI self-diagnostics to stop the system running amok. A few weeks later, a bipartisan Congressional commission argued that autonomous weapons could be deployed in accordance with military ethics and the law of war, declaring that “ethics and strategic necessity are compatible with one another.” Early the following year, then-Defense Secretary Mark Esper adopted many of these ideas in a formal set of ethical principles, with DoD later inviting over a dozen friendly nations to discuss them.

But it took until 2023 for the DoD to expand these principles into a comprehensive policy and implementation plan. What’s more, that policy carefully and deliberately left a pathway open for fully autonomous weapons, even as State managed to co-opt a UN resolution introduced by activists seeking a binding legal ban on such weapons.

The crucial step came in late January, with the long-awaited revision of the governing DoD Directive on Autonomy in Weapons Systems, DoDD 3000.09 [PDF]. While much longer and more detailed than the 2012 original, with a strong emphasis on implementing the 2020 ethical principles, the new 3000.09 retained a crucial provision allowing the deputy secretary to waive all its restrictions “in cases of urgent military need.”

The new 3000.09 also exempted “operator-supervised autonomous weapon systems used to select and engage materiel targets for local defense to intercept attempted time-critical or saturation attacks.” That section is written to cover defenses that require superhuman reaction speeds to shoot down fast and/or numerous incoming threats, from a Navy Aegis cruiser to an Army tank’s anti-antitank missile system.

Aegis, Patriot, and similar air defense systems have actually had a fully autonomous mode for decades, because Cold War commanders feared the Soviets would unleash too many bombers and missiles for humans to track, a fear now revived by China’s buildup. While such autonomous defenses are focused on destroying missiles, they’re perfectly capable of shooting down manned aircraft as well. In fact, they’ve killed friendly aircrew by accident: twice in 2003, for example, when Patriot batteries were mistakenly switched to their Cold War fully automatic mode.

It’s worth noting that not one of these systems is “artificial intelligence” in the modern sense of the word. They don’t use machine learning and neural nets to digest masses of data and then modify their own algorithms. They’re straightforward, deterministic IF-THEN codes of the kind in use since the days of punch cards and vacuum tubes.

A Romanian HAWK air defense battery opens fire during a NATO training exercise in 2017. (US Army photo by Pfc. Nicholas Vidro)

“As lieutenant, I was operating a HAWK air defense unit in Europe,” Kendall recalled. “I had a switch on my console, it said ‘automatic,’ and I could have put the switch in that position and just sat there and watched this shoot down airplanes.”

“The data inputs that were necessary to enable that were pretty straightforward,” he said. “Which direction is the airplane that your radar’s tracking going in? How fast is it going? What altitude is it at, and is it sending any IFF, Identification Friend or Foe, signal? … That was in 1973. We are infinitely better than that now.”

Modern machine-learning AI can sort through much larger and more complicated datasets, which makes it increasingly possible for an automated system to target not just missiles and jets in the empty air, but ground vehicles partially hidden by terrain, or even human beings under cover. The most plausible nightmare scenario of the arms control activists and AI doomsayers is not some bipedal killbot with an Austrian accent, but a swarm of kamikaze mini-drones, each with just enough intelligence — and explosives — to track down an individual human. But UN arms control negotiations in Geneva have been stalled for almost a decade over how to define such “lethal autonomous weapons systems.”

In February, the State Department’s ambassador at large for arms control, Bonnie Jenkins, went to an international conference on military AI in The Hague and issued a formal Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy. Largely derived from the DoD policies released in January, the US Political Declaration has won official endorsement from 46 other nations, from core allies like Australia, Britain, France, Germany and Japan to minor neutrals like Malta, Montenegro and Morocco.

The UN General Assembly vote in favor of studying “lethal autonomous weapons systems.” (Image via Stop Killer Robots)

In the following months, while State racked up more countries’ endorsements of the Political Declaration, DoD kept developing its policy. June saw a conference of over a hundred senior officials and outside experts and the release of an in-depth Responsible AI Strategy & Implementation Pathway [PDF] detailing 64 “lines of effort,” which were then encapsulated in a handy online guide to help baffled bureaucrats actually execute it. Biden even got China to agree to vaguely defined discussions on “risk and safety issues associated with artificial intelligence” at his November summit with Xi Jinping, with experts suggesting the first topic would be ensuring human control of the highly sensitive command-and-control systems for nuclear weapons.

But even a ban on AI control of nukes — like the SkyNet nightmare straight out of the Terminator movies — would not restrict other applications of military AI, from personnel management to intelligence analysis to “lethal autonomous weapons systems.” The Pentagon strategy has been to codify how to make responsible and ethical use of AI across all those applications — not just the narrow use case of “killer robots” — while still keeping all its options open.

“I don’t see, any time soon, the Terminator or… the rogue robot that goes out there and runs around and shoots everything in sight indiscriminately,” Kendall said. “I think we can prevent that — but we are still going to have to find a way to manage this technology, manage its application, that holds human beings accountable for when it doesn’t comply with the rules that we already have.”